Current position

Naoto Yokoya is a professor with the Department of Complexity Science and Engineering, the Department of Computer Science, and the Department of Information Science at the University of Tokyo, where he co-runs the Machine Learning and Statistical Data Analysis Laboratory. He is also the team director of the Geoinformatics Team at the RIKEN Center for Advanced Intelligence Project (AIP).

His research interests include image processing, data fusion, and machine learning for understanding remote sensing images, with applications to disaster management and environmental monitoring.

He is an associate editor of IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), IEEE Transactions on Geoscience and Remote Sensing (TGRS), and ISPRS Journal of Photogrammetry and Remote Sensing (P&RS).

Featured publications

Z. Liu, F. Liu, W. Xuan, and N. Yokoya, “LandCraft: Designing the structured 3D landscapes via text guidance,” Proc. AAAI, 2026.

Abstract: Modeling large-scale landscapes is a foundational yet time-consuming task in many 3D applications, typically requiring substantial expertise. Recently, Text-to-3D techniques have emerged as a promising, beginner-friendly prototyping approach for generating 3D content from textual input. However, existing methods either produce unusable, problematic geometries, or fail to fully capture the user’s complex intent from the input text, making it difficult to generate high-quality landscape assets with controllable spatial and geographic features. In this paper, we present LandCraft, a novel AI-assisted authoring tool that enables the rapid creation of high-quality landscape scenes based on user descriptions. Our system employs a coarse-to-fine generation process: Initially, large language and deep generative models concretize textual ideas into abstract representations that capture essential landscape features, such as spatial and geographic characteristics. Then, we leverage a comprehensive procedural generation module to synthesize the detailed, structurally consistent 3D landscapes based on these inferred representations. Our LandCraft can effectively generate production-ready 3D scene assets that can be seamlessly exported to external game engines or modeling software, enabling immediate practical use.

J. Wang, W. Xuan, H. Qi, Z. Liu, K. Liu, Y. Wu, H. Chen, J. Song, J. Xia, Z. Zheng, and N. Yokoya, “DisasterM3: A remote sensing vision-language dataset for disaster damage assessment and response,” Proc. NeurIPS, 2025.

Abstract: Large vision-language models (VLMs) have made great achievements in Earth vision. However, complex disaster scenes with diverse disaster types, geographic regions, and satellite sensors have posed new challenges for VLM applications. To fill this gap, we curate a remote sensing vision-language dataset (DisasterM3) for global-scale disaster assessment and response. DisasterM3 includes 26,988 bi-temporal satellite images and 123k instruction pairs across 5 continents, with three characteristics: 1) Multi-hazard: DisasterM3 involves 36 historical disaster events with significant impacts, which are categorized into 10 common natural and man-made disasters. 2) Multi-sensor: Extreme weather during disasters often hinders optical sensor imaging, making it necessary to combine Synthetic Aperture Radar (SAR) imagery for post-disaster scenes. 3) Multi-task: Based on real-world scenarios, DisasterM3 includes 9 disaster-related visual perception and reasoning tasks, harnessing the full potential of VLM's reasoning ability with progressing from disaster-bearing body recognition to structural damage assessment and object relational reasoning, culminating in the generation of long-form disaster reports. We extensively evaluated 14 generic and remote sensing VLMs on our benchmark, revealing that state-of-the-art models struggle with the disaster tasks, largely due to the lack of a disaster-specific corpus, cross-sensor gap, and damage object counting insensitivity. Focusing on these issues, we fine-tune four VLMs using our dataset and achieve stable improvements across all tasks, with robust cross-sensor and cross-disaster generalization capabilities.

W. Xuan, J. Wang, H. Qi, Z. Chen, Z. Zheng, Y. Zhong, J. Xia, and N. Yokoya, “DynamicVL: Benchmarking multimodal large language models for dynamic city understanding,” Proc. NeurIPS, 2025.

Abstract: Multimodal large language models have demonstrated remarkable capabilities in visual understanding, but their application to long-term Earth observation analysis remains limited, primarily focusing on single-temporal or bi-temporal imagery. To address this gap, we introduce DVL-Suite, a comprehensive framework for analyzing long-term urban dynamics through remote sensing imagery. Our suite comprises 15,063 high-resolution (1.0m) multi-temporal images spanning 42 megacities in the U.S. from 2005 to 2023, organized into two components: DVL-Bench and DVL-Instruct. The DVL-Bench includes seven urban understanding tasks, from fundamental change detection (pixel-level) to quantitative analyses (regional-level) and comprehensive urban narratives (scene-level), capturing diverse urban dynamics including expansion/transformation patterns, disaster assessment, and environmental challenges. We evaluate 17 state-of-the-art multimodal large language models and reveal their limitations in long-term temporal understanding and quantitative analysis. These challenges motivate the creation of DVL-Instruct, a specialized instruction-tuning dataset designed to enhance models' capabilities in multi-temporal Earth observation. Building upon this dataset, we develop DVLChat, a baseline model capable of both image-level question-answering and pixel-level segmentation, facilitating a comprehensive understanding of city dynamics through language interactions.

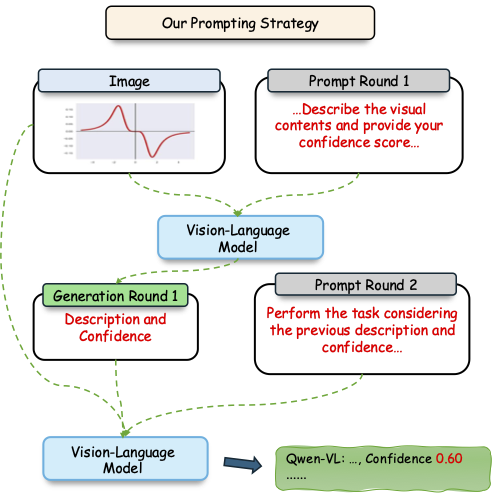

W. Xuan, Q. Zeng, H. Qi, J. Wang, and N. Yokoya, “Seeing is believing, but how much? A comprehensive analysis of verbalized calibration in vision-language models,” Proc. EMNLP (oral), 2025.

Abstract: Uncertainty quantification is essential for assessing the reliability and trustworthiness of modern AI systems. Among existing approaches, verbalized uncertainty, where models express their confidence through natural language, has emerged as a lightweight and interpretable solution in large language models (LLMs). However, its effectiveness in vision-language models (VLMs) remains insufficiently studied. In this work, we conduct a comprehensive evaluation of verbalized confidence in VLMs, spanning three model categories, four task domains, and three evaluation scenarios. Our results show that current VLMs often display notable miscalibration across diverse tasks and settings. Notably, visual reasoning models (i.e., thinking with images) consistently exhibit better calibration, suggesting that modality-specific reasoning is critical for reliable uncertainty estimation. To further address calibration challenges, we introduce Visual Confidence-Aware Prompting, a two-stage prompting strategy that improves confidence alignment in multimodal settings. Overall, our study highlights the inherent miscalibration in VLMs across modalities. More broadly, our findings underscore the fundamental importance of modality alignment and model faithfulness in advancing reliable multimodal systems.

W. Gan, F. Liu, H. Xu, and N. Yokoya, “GaussianOcc: Fully self-supervised and efficient 3D occupancy estimation with Gaussian splatting,” Proc. ICCV, 2025.

Abstract: We introduce GaussianOcc, a systematic method that investigates Gaussian splatting for fully self-supervised and efficient 3D occupancy estimation in surround views. First, traditional methods for self-supervised 3D occupancy estimation still require ground truth 6D ego pose from sensors during training. To address this limitation, we propose Gaussian Splatting for Projection (GSP) module to provide accurate scale information for fully self-supervised training from adjacent view projection. Additionally, existing methods rely on volume rendering for final 3D voxel representation learning using 2D signals (depth maps and semantic maps), which is time-consuming and less effective. We propose Gaussian Splatting from Voxel space (GSV) to leverage the fast rendering properties of Gaussian splatting. As a result, the proposed GaussianOcc method enables fully self-supervised (no ground-truth ego pose) 3D occupancy estimation in competitive performance with low computational cost (2.7 times faster in training and 5 times faster in rendering). The relevant code is available here.

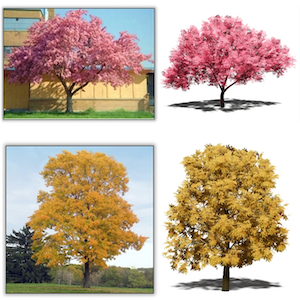

Z. Liu, Z. Cheng, and N. Yokoya, “Neural hierarchical decomposition for single image plant modeling,” Proc. CVPR, 2025.

Abstract: Obtaining high-quality, practically usable 3D models of biological plants remains a significant challenge in computer vision and graphics. In this paper, we present a novel method for generating realistic 3D plant models from single-view photographs. Our approach employs a neural decomposition technique to learn a lightweight hierarchical box representation from the image, effectively capturing the structures and botanical features of plants. Then, this representation can be subsequently refined through a shape-guided parametric modeling module to produce complete 3D plant models. By combining hierarchical learning and parametric modeling, our method generates structured 3D plant assets with fine geometric details. Notably, through learning the decomposition in different levels of detail, our method can adapt to two distinct plant categories: outdoor trees and houseplants, each with unique appearance features. Within the scope of plant modeling, our method is the first comprehensive solution capable of reconstructing both plant categories from single-view images.

J. Song, H. Chen, W. Xuan, J. Xia, and N. Yokoya, “SynRS3D: A synthetic dataset for global 3D semantic understanding from monocular remote sensing imagery,” Proc. NeurIPS (spotlight), 2024.

Abstract: Global semantic 3D understanding from single-view high-resolution remote sensing (RS) imagery is crucial for Earth Observation (EO). However, this task faces significant challenges due to the high costs of annotations and data collection, as well as geographically restricted data availability. To address these challenges, synthetic data offer a promising solution by being easily accessible and thus enabling the provision of large and diverse datasets. We develop a specialized synthetic data generation pipeline for EO and introduce SynRS3D, the largest synthetic RS 3D dataset. SynRS3D comprises 69,667 high-resolution optical images that cover six different city styles worldwide and feature eight land cover types, precise height information, and building change masks. To further enhance its utility, we develop a novel multi-task unsupervised domain adaptation (UDA) method, RS3DAda, coupled with our synthetic dataset, which facilitates the RS-specific transition from synthetic to real scenarios for land cover mapping and height estimation tasks, ultimately enabling global monocular 3D semantic understanding based on synthetic data. Extensive experiments on various real-world datasets demonstrate the adaptability and effectiveness of our synthetic dataset and proposed RS3DAda method. SynRS3D and related codes will be available.

Education

| 2013 Mar. | D.Eng. | Department of Aeronautics and Astronautics | The University of Tokyo | Japan |

| 2010 Sep. | M.Eng. | Department of Aeronautics and Astronautics | The University of Tokyo | Japan |

| 2008 Mar. | B.Eng. | Department of Aeronautics and Astronautics | The University of Tokyo | Japan |

Experience

| 2025 Apr. | - | present | Professor | The University of Tokyo | Japan |

| 2023 Apr. | - | present | Team Director | RIKEN | Japan |

| 2022 Dec. | - | 2025 Mar. | Associate Professor | The University of Tokyo | Japan |

| 2020 May | - | 2022 Nov. | Lecturer | The University of Tokyo | Japan |

| 2018 Jan. | - | 2023 Mar. | Unit Leader | RIKEN | Japan |

| 2019 Apr. | - | 2020 Mar. | Visiting Associate Professor | Tokyo University of Agriculture and Technology | Japan |

| 2015 Dec. | - | 2017 Nov. | Alexander von Humboldt Research Fellow | DLR and TUM | Germany |

| 2013 Jul. | - | 2017 Dec. | Assistant Professor | The University of Tokyo | Japan |

| 2013 Aug. | - | 2014 Jul. | Visiting Scholar | National Food Research Institute (NFRI) | Japan |

| 2012 Apr. | - | 2013 Jun. | JSPS Research Fellow | The University of Tokyo | Japan |

Awards

| Funai Academic Award (2024). |

| Young Scientists’ Award, MEXT (2024). |

| Clarivate Highly Cited Researcher in Geosciences (2022, 2023, 2024, 2025). |

| 1st place in the 2017 IEEE GRSS Data Fusion Contest. |

| Alexander von Humboldt research fellowship for postdoctoral researchers (2015). |

| Best presentation award of the Remote Sensing Society of Japan (2011, 2012, 2019). |

Research grants

| 2025 Oct. | - | 2031 Mar. | PI, CRONOS, Japan Science and Technology Agency (JST) |

| 2025 Apr. | - | 2028 Mar. | PI, NEXUS (Networked Exchange, United Strength for Stronger Partnerships between Japan and ASEAN) Japan-Singapore Joint Research, Japan Science and Technology Agency (JST) |

| 2023 Sep. | - | 2028 Mar. | CI, SIP, Development of a Resilient Smart Network System against Natural Disasters, National Research Institute for Earth Science and Disaster Resilience (NIED) |

| 2022 Apr. | - | 2026 Mar. | PI, Grant-in-Aid for Scientific Research B, Japan Society for the Promotion of Science (JSPS) |

| 2021 Apr. | - | 2028 Mar. | PI, FOREST (Fusion Oriented REsearch for disruptive Science and Technology), Japan Science and Technology Agency (JST) |

| 2021 Apr. | - | 2024 Mar. | CI, Grant-in-Aid for Scientific Research B, Japan Society for the Promotion of Science (JSPS) |

| 2019 Apr. | - | 2022 Mar. | CI, Grant-in-Aid for Scientific Research B, Japan Society for the Promotion of Science (JSPS) |

| 2018 Apr. | - | 2021 Mar. | PI, Grant-in-Aid for Young Scientists, Japan Society for the Promotion of Science (JSPS) |

| 2015 Apr. | - | 2018 Mar. | PI, Grant-in-Aid for Young Scientists (B), Japan Society for the Promotion of Science (JSPS) |

| 2015 Jan. | - | 2016 Dec. | PI, Research Grant Program, Kayamori Foundation of Informational Science Advancement |

| 2013 Aug. | - | 2014 Mar. | PI, Adaptable and Seamless Technology Transfer Program through Target-driven R&D (A-STEP), Japan Science and Technology Agency (JST) |

| 2012 Apr. | - | 2013 Jun. | PI, Grant-in-Aid for JSPS Fellows, Japan Society for the Promotion of Science (JSPS) |

Teaching

| Mathematics for Information Science (in Japanese) | The University of Tokyo | since 2020 |

| Computer Vision (in Japanese) | The University of Tokyo | since 2021 |

| Remote Sensing Image Analysis (in English) | The University of Tokyo | since 2021 |

International mobility

| 2015 Sep. | - | 2017 Nov. | Visiting scholar at DLR and TUM, München, Germany. |

| 2011 Oct. | - | 2012 Mar. | Visiting student at the Grenoble Institute of Technology, Grenoble, France. |

Service

| Organizer | IEEE GRSS Data Fusion Contest 2018, 2019, 2020, 2021, 2025 |

| Organizer | OpenEarthMap Few-Shot Challenge @ L3D-IVU CVPR 2024 Workshop |

| Organizer | IJCAI CDCEO Workshop 2022 |

| Organizer | CVPR EarthVision Workshop 2019, 2020, 2021, 2022 |

| Chair & Co-Chair | IEEE GRSS Image Analysis and Data Fusion Technical Committee (2017-2021) |

| Secretary | IEEE GRSS All Japan Joint Chapter (2018-2021) |

| Student Activity & TIE Event Chair | IEEE IGARSS 2019 |

| Program Chair | IEEE WHISPERS 2015 |

Editorial activity

Reviewer

Reviewer for various journals and conferences:

- IEEE Transactions on Geoscience and Remote Sensing

- IEEE Transactions on Image Processing

- IEEE Transactions on Signal Processing

- IEEE Transactions on Computational Imaging

- IEEE Transactions on Pattern Analysis and Machine Intelligence

- IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing

- IEEE Journal of Selected Topics in Signal Processing

- IEEE Geoscience and Remote Sensing Letters

- IEEE Geoscience and Remote Sensing Magazine

- Proceedings of the IEEE

- ISPRS Journal of Photogrammetry and Remote Sensing

- Remote Sensing

- Remote Sensing of Environment

- International Journal of Remote Sensing

- Pattern Recognition

- Pattern Recognition Letters

- Neurocomputing

- CVPR

- ECCV

- ICCV

- NeurIPS

- AAAI

- WACV